Redlines (i.e. strike-throughs and underlines that indicate a change from the previous version) are a common way to show the changes between versions of a document, but, unless you tell an LLM where those redlines are, the text appears as be a confusing mess to the LLM. Extracting the exact location of the redlines in PDFs turns out to be harder than I thought, and so I’m sharing my approach here.

Base Approach

Redline documents usually originate from MS Word docx files with track changes enabled that are then exported to PDF. In these files, strike-throughs and underlines (referred to as “revisions” in the rest of the post) that indicate edits aren’t part of the text objects; they’re “drawing” elements like rectangles or lines.

Sample Text Object

{

"x0": 154.04881286621094,

"y0": 418.4966735839844,

"x1": 186.7164306640625,

"y1": 431.9029235839844,

"text": "MCPs",

"block_number": 2,

"line_number": 20,

"word_number": 2

}Sample Drawing Object

{

"type": "f",

"even_odd": false,

"fill_opacity": 1.0,

"fill": [0.0, 0.4690000116825104, 0.8320000171661377], // red component, green component, blue component

"rect": {

"x0": 147.36000061035156,

"y0": 426.239990234375,

"x1": 488.03997802734375,

"y1": 426.8399963378906

},

"seqno": 9,

"layer": "",

"closePath": null,

"color": null,

"width": null,

"lineCap": null,

"lineJoin": null,

"dashes": null,

"stroke_opacity": null

}

To associate these revision drawings with their corresponding text, one approach is to detect overlaps between the the drawing and the relevant text. Strike-through lines typically cross through the middle of the text they modify, while underlines appear just below the text baseline. These overlaps aren’t exact - due to variations in font sizes, kerning, and rendering - so, when calculating intersections, you need to include a tolerance to accommodate slight misalignments.

def annotation_check(

text_object: dict, drawing_object: dict, annotation_epsilon: float = 10

) -> "strikethrough" | "underline":

if text_object["y0"] + annotation_epsilon >= drawing_object["y0"]:

return "strikethrough"

else:

return "underline"

def x_overlap(rectangle_object: dict, other_rectangle: dict) -> float:

return (

max(0, min(rectangle_object["x1"], other_rectangle["x1"]) - max(rectangle_object["x0"], other_rectangle["x0"]))

/ (rectangle_object["x1"] - rectangle_object["x0"])

)

def same_word_check(

text_object: dict, drawing_object: dict, word_epsilon: float = 1, overlap_threshold: float = 0.01

) -> bool:

end = True

word_start = text_object["x0"] >= drawing_object["rect"]["x0"] - word_epsilon

word_end = text_object["x1"] <= drawing_object["rect"]["x1"] + word_epsilon

if word_start and not word_end:

word_end = (drawing_object["rect"]["x1"] <= text_object["x1"]) and (

drawing_object["rect"]["x1"] >= text_object["x0"]

)

end = False

elif not word_start and word_end:

word_start = (drawing_object["rect"]["x0"] >= text_object["x0"]) and (

drawing_object["rect"]["x0"] <= text_object["x1"]

)

if word_start and word_end:

overlap = x_overlap(drawing_object["rect"], text_object)

if overlap < overlap_threshold:

word_start = False

word_end = False

elif not word_start and not word_end:

overlap = x_overlap(drawing_object["rect"], text_object)

if overlap > overlap_threshold:

word_start = True

word_end = True

return word_start and word_end

def same_line_check(

text_object: dict, drawing_object: dict, line_point_epsilon: float = 2

) -> bool:

line_point = drawing_object["rect"]["y0"] - line_point_epsilon

same_line = (

text_object["y0"] <= line_point and text_object["y1"] >= line_point

)

return same_line

def get_redline_annotation(text_object: dict, drawing_object: dict) -> "strikethrough" | "underline" | None:

if same_line_check(text_object, drawing_object) and same_word_check(text_object, drawing_object):

return annotation_check(text_object, drawing_object)

return NoneChallenge 1: Non-Redline Intersections

One significant challenge is the presence of other rectangles and lines in the document that aren’t related to revisions — such as those used for hyperlinks, tables, etc. These additional drawings can interfere with the detection process, leading to false positives when trying to mark the text objects that have changes.

Color can be a helpful indicator to differentiate the revisions from other drawings, especially if you know the specific color encoding used for revisions in the document. By filtering drawings based on color, you can reduce the noise and focus on the shapes that likely represent revisions.

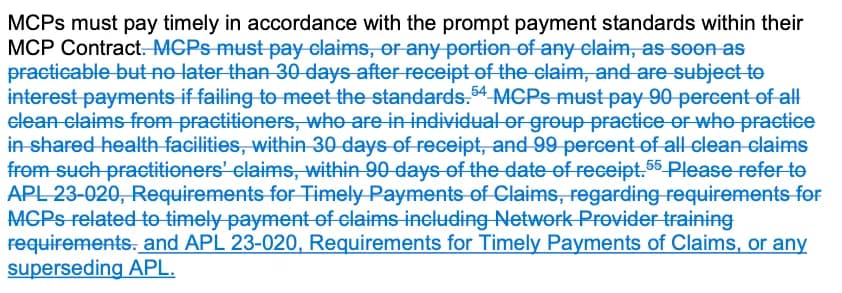

However, relying solely on color isn’t foolproof. Sometimes, revisions share the same color as other drawings — for example, the blue commonly used for hyperlinks (see image below, a small part of the hyperlink is redlined, can you spot it?). In such cases, a simple color filter won’t suffice. One more involved but also potentially more robust approach is to train a computer vision model to specifically classify whether a given drawing is a revision or not.

![]()

Challenge 2: Text Overlaps

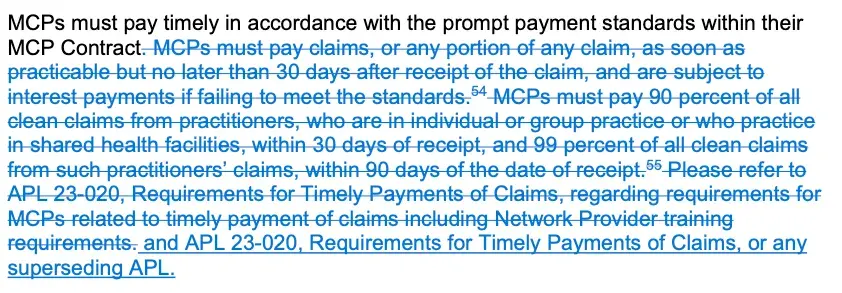

Another significant challenge arises when the PDF encodes multiple words as a single text object without spaces. This can be particularly problematic in the case of revisions, where a portion of that text object may be struck-through and the other part is underlined (in the image, below, the pdf represents this as one word - “(TAR)Form”). Since the PDFs I dealt with didn’t provide individual character positions, determining exactly which characters are affected by revisions is not straightforward.

![]()

One approach is to assume that each character within the text object has the same width. By using the total width of the text object, you can estimate the position of each character and correlate them with the coordinates of the revision drawings. However, this assumption often fails in practice due to the varying widths of characters in fonts.

A more robust method is to crop an image of the PDF based on the coordinates of the revision drawing and then use an OCR system, such as AWS Textract, to extract the portion of text associated with that annotation. While this approach does yield better results, OCR systems are not perfect and can introduce their own issues (e.g. transcribing “it’s” as “izs”), affecting the overall accuracy of the parsing process. OCR robustness can be potentially improved by using an ensemble of OCR systems.

def get_page_as_bytes(

page: pymupdf.Page, crop_area: Optional[pymupdf.Rect], zoom: int = 1

) -> bytes:

# Set the zoom factor for higher resolution

matrix = pymupdf.Matrix(zoom, zoom)

# Render page to an cropped image

pix = page.get_pixmap(matrix=matrix, clip=crop_area)

# Convert pixmap to PNG

img_bytes = pix.tobytes("png")

return img_bytes

def get_chunk_text(

rectangles: list[tuple["strikethrough" | "underline" | "empty", dict]], page: pymupdf.Page, text_object: dict, text_object_overlap_threshold: float = 0.95, zoom_scale_factor: int = 5

) -> tuple[list[str], list[tuple["strikethrough" | "underline" | "empty", dict]]]:

pymupdf_rectangles: list[pymupdf.Rect] = []

# loop through all the drawing rectangles, and create a pymupdf rectangle for each

for rectangle in rectangles:

# create a pymupdf rectangle for the current drawing rectangle, but only for the portion that overlaps with the text object

pymupdf_rectangle = pymupdf.Rect(

x0=max(rectangle[1]["rect"]["x0"], text_object["x0"]),

y0=text_object["y0"],

x1=min(rectangle[1]["rect"]["x1"], text_object["x1"]),

y1=text_object["y1"],

)

# handle text objects that have no overlap with the current drawing rectangle

if int(pymupdf_rectangle.x0) == int(pymupdf_rectangle.x1):

pymupdf_rectangle = pymupdf.Rect(

x0=text_object["x0"],

y0=text_object["y0"],

x1=text_object["x1"],

y1=text_object["y1"],

)

pymupdf_rectangles.append(pymupdf_rectangle)

# check if the cropped rectangles only partially overlap with the text object

consolidated_rectangle = {

"x0": min([rectangle.x0 for rectangle in pymupdf_rectangles]),

"y0": min([rectangle.y0 for rectangle in pymupdf_rectangles]),

"x1": max([rectangle.x1 for rectangle in pymupdf_rectangles]),

"y1": max([rectangle.y1 for rectangle in pymupdf_rectangles]),

}

text_object_overlap = x_overlap(text_object, consolidated_rectangle)

# if the cropped rectangles only partially overlap with the text object, then we need to add a new rectangle or two for the missing portion

if text_object_overlap < text_object_overlap_threshold:

new_active_rectangle = (

"empty",

consolidated_rectangle,

)

# check if missing chunk is at start of text_object

if text_object["x0"] < consolidated_rectangle["x0"] - 1:

pymupdf_rectangles.insert(

0,

pymupdf.Rect(

x0=text_object["x0"],

y0=text_object["y0"],

x1=consolidated_rectangle["x0"],

y1=text_object["y1"],

),

)

rectangles.insert(

0,

new_active_rectangle,

)

# check if missing chunk is at end of text_object

if word.rectangle.x1 > consolidated_rectangle.x1 + 1:

pymupdf_rectangles.append(

pymupdf.Rect(

x0=consolidated_rectangle["x1"],

y0=text_object["y0"],

x1=text_object["x1"],

y1=text_object["y1"],

),

)

rectangles.append(new_active_rectangle)

# get the images for each of the cropped rectangles

images = [

get_page_as_bytes(page, crop_area=rectangle, zoom=zoom_scale_factor)

for rectangle in pymupdf_rectangles

]

# ocr the images

raw_output = [get_textract(img_bytes) for img_bytes in images]

# combine the ocr results for each of the cropped rectangles

output = []

for text_response in raw_output:

# concatenate all the words in the ocr response separated by spaces

potential_text = " ".join(

[

block["Text"]

for block in text_response["Blocks"]

if block["BlockType"] == "WORD"

]

)

# if the entire ocr text isn't in the original text object, then we need to check if the ocr text without spaces is in the original text object

if potential_text not in text_object["text"]:

if potential_text.replace(" ", "") in text_object["text"]:

potential_text = potential_text.replace(" ", "")

else:

# if the ocr text without spaces isn't in the original text object, then we need to check if any of the words in the ocr text are in the original text object

# and greedily take the first match - this is not foolproof but seems to work well in practice

for item in potential_text.split(" "):

if item in text_object["text"]:

potential_text = item

break

output.append(potential_text)

return output, rectanglesOutput

Once you figure out which text objects are associated with which revision drawings, there are a number of ways to represent the results. One simple way is using html tags to represent the revisions.

MCPs must pay timely in accordance ... Contract.<strikethrough> MCPs must pay claims,

or any portion ... training requirements.</strikethrough><underline>and APL 23-020,

Requirements ... superseding APL.</underline>This can be passed to an LLM for further analysis in a way that is easier for the LLM to understand where the actual changes are.

What about just throwing an LLM at this?

I tried to pass this to a multi-modal LLM (gpt 4o and claude 3.5 sonnet), but the results were at best inconsistent and mostly unusable. I think this might be due to the loss of information as images are downscaled and pre-processed before being passed to the LLM, but I’m not entirely sure. If you do know why this is happening, please let me know!